I just returned from NAACL 2015 in beautiful Denver, CO. This was my first “big” conference, so I didn’t know quite what to expect. Needless to say, I was blown away (for better or for worse).

First, a side note: I’d like to thank NAACL, and specifically conference chair Rada Mihalcea, for providing captions throughout the entire conference. Although there were some technical hiccups, we all got through them. Moreover, Hal Daume and the rest of the NAACL board were extremely receptive to expanding accessibility going forward. I look forward to working with all of them.

Since this was my first “big” conference, this is also my first “big” conference writeup. Let’s see how it goes.

Keynote #1: Lillian Lee – Big Data Pragmatics etc…

- This was a really fun and insightful talk to open the conference. There were a few themes within Lillian’s talk, but my two favorites were why movie quotes become popular and why we use hedging. Regarding the first topic, my favorite quote was: “When Arnold says, ‘I’ll be back’, everyone talked about it. When I say ‘I’ll be back’, you guys are like ‘Well, don’t rush!’”

- The other theme I really enjoyed was “hedging” and why we do it. I find this topic fascinating, since hedging is all around us. For instance, in saying “I’d claim it’s 200 yards away,” we add no new information with “I’d claim.” So why do we say it? I think hedging is also a hallmark of hipster-speak, e.g., “This is maybe the best bacon I’ve ever had.”

- This paper uses a lightly supervised method to infer user attributes such as age and political orientation, which avoids the need for costly annotation. One way the authors infer attributes is by determining which Twitter accounts are central to a given class. It’s really interesting, and I need to read the paper in depth to fully understand it.

One Minute Madness

- This was fun. Everyone who presented a poster had one minute to preview/explain their poster. Some “presentations” were funny and some really pushed the 60-second mark. Joel Tetreault did a nice job enforcing the time limit. Here’s a picture of the “lineup” of speakers.

Nathan Schneider & Noah Smith – A Corpus and Model Integrating Multiword Expressions and Supersenses

- Nathan Schneider has been doing some really interesting semantic work, whether on FrameNet or on MWEs. Here, the CMU folks did a ton of manual annotation of the “supersenses” of words and MWEs. Not only do they achieve some really impressive results on MWE tagging, but they have also provided a really valuable resource to the MWE community: their STREUSLE 2.0 corpus of annotated MWEs/supersenses.

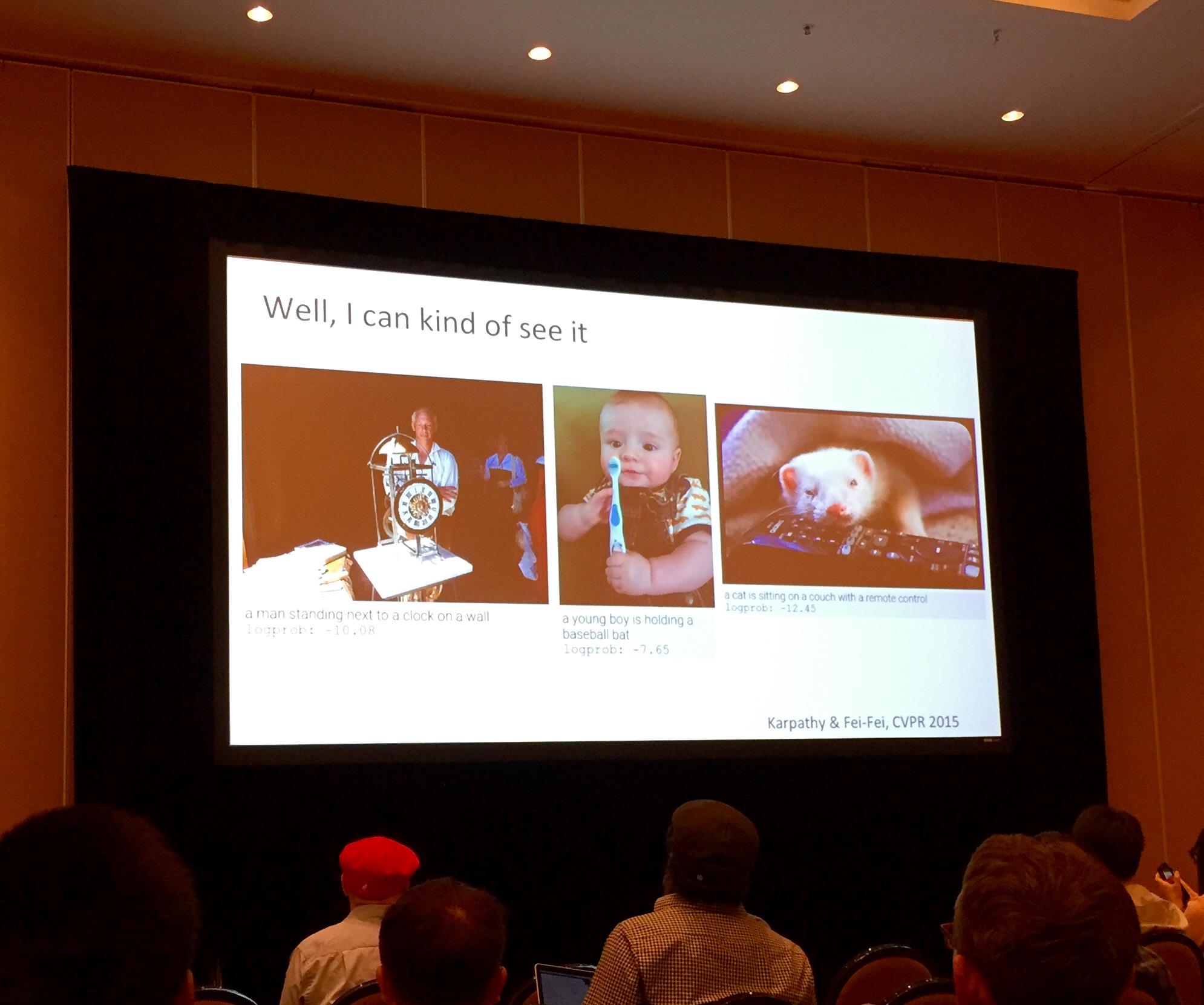

Keynote #2: Fei-Fei Li – A Quest for Visual Intelligence in Computers

- This was a fascinating talk. The idea here is to combine image recognition with semantics/NLP. For a computer to really “identify” something, it has to understand its meaning; pixel values are not “meaning.” I wish I had taken better notes, but Fei-Fei’s lab was able to achieve some incredibly impressive results. Of course, even the best image recognition makes some (adorable) mistakes.

Manaal Faruqui et al. – Retrofitting Word Vectors to Semantic Lexicons

- This was one of the papers that won a Best Paper Award, and for good reason. It addresses a fundamental conflict in computational linguistics, specifically within computational semantics: distributional meaning representation vs. lexical semantics. The authors combine distributional vector representations with information from lexicons such as WordNet and FrameNet, and achieve significantly higher accuracy on semantic evaluation tasks in multiple languages. Moreover, their methods are highly modular, and they have made their tools available online. This is something I look forward to tinkering around with.
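For anyone else who wants to tinker, here is a minimal sketch of the retrofitting update as I understand it from the paper: each word’s vector is repeatedly replaced by a weighted average of its original distributional vector and the current vectors of its lexicon neighbors. The specific weights (α = 1 for the original vector, β = 1/degree for each neighbor), the function name `retrofit`, and the dict-of-sets lexicon format are my own assumptions for illustration; this is not the authors’ released tool.

```python
from typing import Dict, List, Set

Vector = List[float]


def retrofit(
    word_vecs: Dict[str, Vector],
    lexicon: Dict[str, Set[str]],
    iterations: int = 10,
) -> Dict[str, Vector]:
    """Pull each word's vector toward its lexicon neighbors.

    Assumed weights: alpha = 1 for the original vector and beta = 1 / degree
    for each neighbor, so every update is
        q_i = (q_hat_i + mean of neighbor vectors) / 2
    """
    # Start from a copy so the original distributional vectors (q_hat) are kept.
    new_vecs = {w: list(v) for w, v in word_vecs.items()}
    for _ in range(iterations):
        for word, neighbors in lexicon.items():
            if word not in word_vecs:
                continue
            # Only neighbors that actually have a vector can contribute.
            present = [n for n in sorted(neighbors) if n in new_vecs and n != word]
            if not present:
                continue
            dim = len(word_vecs[word])
            neighbor_sum = [0.0] * dim
            for n in present:
                # Neighbors contribute their current (possibly already updated) vectors.
                for k in range(dim):
                    neighbor_sum[k] += new_vecs[n][k]
            deg = len(present)
            # Average of the original vector (weight 1) and the neighbor mean (total weight 1).
            new_vecs[word] = [
                (word_vecs[word][k] + neighbor_sum[k] / deg) / 2.0 for k in range(dim)
            ]
    return new_vecs


# Toy example (hypothetical vectors and lexicon): "happy" and "glad" are listed
# as synonyms, so one pass pulls them together; "sad" has no lexicon entry.
vecs = {"happy": [1.0, 0.0], "glad": [0.0, 1.0], "sad": [-1.0, 0.0]}
lex = {"happy": {"glad"}, "glad": {"happy"}}
out = retrofit(vecs, lex, iterations=1)
print(out["happy"])  # [0.5, 0.5]
print(out["glad"])   # [0.25, 0.75]
print(out["sad"])    # [-1.0, 0.0]
```

Words without lexicon entries keep their original vectors, which is part of what makes the approach modular: it post-processes any set of pre-trained vectors without retraining them.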

Some posters that I really enjoyed

- Oracle and Human Baselines for Native Language Identification – Shervin Malmasi, Joel Tetreault and Mark Dras

- Lexicon-Free Conversational Speech Recognition with Neural Networks – Andrew L. Maas, Ziang Xie, Dan Jurafsky, Andrew Y. Ng

- Using Zero-Resource Spoken Term Discovery for Ranked Retrieval – Jerome White et al.

- Recognizing Textual Entailment using Dependency Analysis and Machine Learning – Nidhi Sharma, Richa Sharma and Kanad K. Biswas

Overall impressions

- Deep learning and neural nets are still breaking new ground in NLP. If you’re in the NLP domain, it would behoove you to gain a solid understanding of them, because they can achieve some incredibly impressive results.

- Word embeddings: The running joke throughout the conference was that if you wanted your paper to be accepted, it had to include “word embeddings” in the title. Embeddings were everywhere (I think I saw somewhere that ~30% of the posters included the term in their title). Even Chris Manning felt the need to comment on this in his talk and on Twitter:

RT @aidotech: RT aidotech: Chris actually showing a tweet on his slides! #deeplearning #naacl2015 pic.twitter.com/GWI7rDiQVC

— StanfordCSLI (@StanfordCSLI) June 5, 2015

Takeaways for Future Conferences

- I should’ve read more of the papers beforehand. Then I would have been better prepared to ask good questions and get more out of the presentations.

- As Andrew warned me beforehand, “You will burn out.” And he was right. There’s no way to fully absorb every paper at every talk you attend. At some point, it becomes beneficial to just take a breather and do nothing. I did this on Wednesday morning, and I’m really glad I did.

- Get to breakfast early. If you come downstairs 10 minutes before the first session, you’ll be scraping the (literal) bottom of the barrel on the buffet line.

Shameless self-citation: Here is the paper Andrew and I wrote for the conference.